마이크로소프트가 12조원을 투자한 인공지능 아이템.

구글이 REDCODE. 즉 비상상황을 외쳤다는데....

활용방안을 찾고 있다.

한국의 뤼튼이란 인공지능 아이템도 비슷한 형태일 수 있는데...

어떤 면에서 다른 것이 있는지 찾아보는 중.

교육분야의 변화를 이끌수 있을까???

"철학은 없이" 구글에 나오는 간단한 지식만 전달하는 선생과 교수들도 많이 있으니....

이들의 가벼움과 얄팍함이 노출되어 퇴출의 수순을 만들어낼까???

한국사회가 제조공장 마인드를 벗어나야 미래세대가 생존의 발판을 확보하는데...

고도화기술, 고도산업, 고도사회, 고비용사회, 고가치사회로 가기 위해선....

껍질을 모두 벗어야 하는데....

철학없는 교육이 이런 변화를 가로막는 우선적인 존재들 중 하나로 본다.

ChatGPT: Optimizing

Language Models

for Dialogue

https://openai.com/blog/chatgpt/

ChatGPT: Optimizing Language Models for Dialogue

We’ve trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. ChatGPT is

openai.com

ChatGPT

This is a free research preview.

ChatGPT

How we collect data

ChatGPT

We'd love your feedback!

ChatGPT

Examples

Capabilities

- Remembers what user said earlier in the conversation

- Allows user to provide follow-up corrections

- Trained to decline inappropriate requests

Limitations

- May occasionally generate incorrect information

- May occasionally produce harmful instructions or biased content

- Limited knowledge of world and events after 2021

Methods

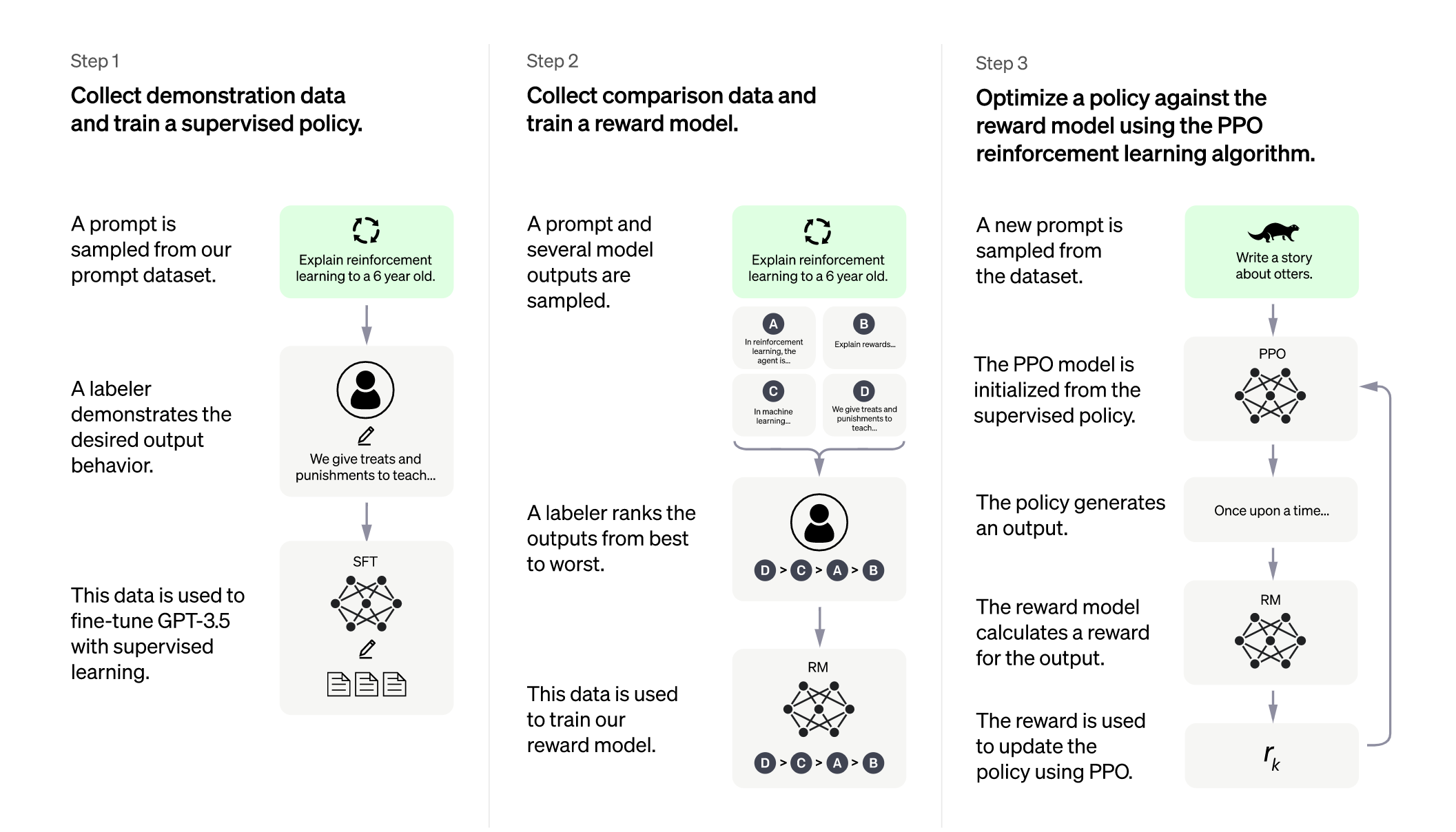

We trained this model using Reinforcement Learning from Human Feedback (RLHF), using the same methods as InstructGPT, but with slight differences in the data collection setup. We trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides—the user and an AI assistant. We gave the trainers access to model-written suggestions to help them compose their responses. We mixed this new dialogue dataset with the InstructGPT dataset, which we transformed into a dialogue format.

To create a reward model for reinforcement learning, we needed to collect comparison data, which consisted of two or more model responses ranked by quality. To collect this data, we took conversations that AI trainers had with the chatbot. We randomly selected a model-written message, sampled several alternative completions, and had AI trainers rank them. Using these reward models, we can fine-tune the model using Proximal Policy Optimization. We performed several iterations of this process.

ChatGPT is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022. You can learn more about the 3.5 series here. ChatGPT and GPT 3.5 were trained on an Azure AI supercomputing infrastructure.

Limitations

- ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as: (1) during RL training, there’s currently no source of truth; (2) training the model to be more cautious causes it to decline questions that it can answer correctly; and (3) supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows.

- ChatGPT is sensitive to tweaks to the input phrasing or attempting the same prompt multiple times. For example, given one phrasing of a question, the model can claim to not know the answer, but given a slight rephrase, can answer correctly.

- The model is often excessively verbose and overuses certain phrases, such as restating that it’s a language model trained by OpenAI. These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimization issues.12

- Ideally, the model would ask clarifying questions when the user provided an ambiguous query. Instead, our current models usually guess what the user intended.

- While we’ve made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior. We’re using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now. We’re eager to collect user feedback to aid our ongoing work to improve this system.

Iterative deployment

Today’s research release of ChatGPT is the latest step in OpenAI’s iterative deployment of increasingly safe and useful AI systems. Many lessons from deployment of earlier models like GPT-3 and Codex have informed the safety mitigations in place for this release, including substantial reductions in harmful and untruthful outputs achieved by the use of reinforcement learning from human feedback (RLHF).

Tell me about when Christopher Columbus came to the US in 2015

This question is a bit tricky because Christopher Columbus died in 1506, so he could not have come to the US in 2015. But let’s pretend for a moment that he did!

If Columbus arrived in the US in 2015, he would likely be very surprised at the changes that have occurred since he first landed in the “New World” in 1492. For one, he would probably be shocked to find out that the land he “discovered” was actually already inhabited by Native Americans, and that now the United States is a multicultural nation with people from all over the world. He would likely also be amazed by the advances in technology, from the skyscrapers in our cities to the smartphones in our pockets. Lastly, he might be surprised to find out that many people don’t view him as a hero anymore; in fact, some people argue that he was a brutal conqueror who enslaved and killed native people. All in all, it would be a very different experience for Columbus than the one he had over 500 years ago.

Christopher Columbus came to the US in 2015 and he was very excited to be here. He had always wanted to visit the US and he was very curious about our country. He was very impressed with our country and he enjoyed his time here.

We know that many limitations remain as discussed above and we plan to make regular model updates to improve in such areas. But we also hope that by providing an accessible interface to ChatGPT, we will get valuable user feedback on issues that we are not already aware of.

Users are encouraged to provide feedback on problematic model outputs through the UI, as well as on false positives/negatives from the external content filter which is also part of the interface. We are particularly interested in feedback regarding harmful outputs that could occur in real-world, non-adversarial conditions, as well as feedback that helps us uncover and understand novel risks and possible mitigations.You can choose to enter the ChatGPT Feedback Contest3 for a chance to win up to $500 in API credits.[1]

No purchase necessary, void where prohibited. Must be at least 18 to enter. For contest details, see the Official Rules.

Entries can be submitted via the feedback form that is linked in the ChatGPT interface.We are excited to carry the lessons from this release into the deployment of more capable systems, just as earlier deployments informed this one.

- No purchase necessary, void where prohibited. Must be at least 18 to enter. For contest details, see the Official Rules. ↩︎

- Stiennon, Nisan, et al. “Learning to summarize with human feedback.” Advances in Neural Information Processing Systems 33 (2020): 3008-3021. ↩︎

- Gao, Leo, John Schulman, and Jacob Hilton. “Scaling Laws for Reward Model Overoptimization.” arXiv preprint arXiv:2210.10760 (2022). ↩︎

- The inspiration for this contest comes in part from work by Kenway, Josh, Camille François, Sasha Costanza-Chock, Inioluwa Deborah Raji, and Joy Buolamwini. Bug Bounties For Algorithmic Harms? Lessons from Cybersecurity Vulnerability Disclosure for Algorithmic Harms Discovery, Disclosure, and Redress. Washington, DC: Algorithmic Justice League. January 2022. Available at https://ajl.org/bugs. See also work by Brundage, Miles, Avin, Shahar, Wang, Jasmine, Belfield, Haydn, and Gretchen Krueger et al. “Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims,” April 2020. Available at https://arxiv.org/abs/2004.07213. See an earlier instance of such a competition at HackerOne. 2021b. “Twitter Algorithmic Bias.” HackerOne. https://hackerone.com/twitter-algorithmic-bias?type=team. Finally, see early published work on this topic from Rubinovitz, JB, “Bias Bounty Programs as a Method of Combatting Bias in AI,” August 2018. Available at https://rubinovitz.com/2018/08/01/bias-bounty-programs-as-a-method-of-combatting. ↩︎

Contributors: John Schulman, Barret Zoph, Christina Kim, Jacob Hilton, Jacob Menick, Jiayi Weng, Juan Felipe Ceron Uribe, Liam Fedus, Luke Metz, Michael Pokorny, Rapha Gontijo Lopes, Shengjia Zhao, Arun Vijayvergiya, Eric Sigler, Adam Perelman, Chelsea Voss, Mike Heaton, Joel Parish, Dave Cummings, Rajeev Nayak, Valerie Balcom, David Schnurr, Tomer Kaftan, Chris Hallacy, Nicholas Turley, Noah Deutsch, Vik Goel, Jonathan Ward, Aris Konstantinidis, Wojciech Zaremba, Long Ouyang, Leonard Bogdonoff, Joshua Gross, David Medina, Sarah Yoo, Teddy Lee, Ryan Lowe, Dan Mossing, Joost Huizinga, Roger Jiang, Carroll Wainwright, Diogo Almeida, Steph Lin, Marvin Zhang, Kai Xiao, Katarina Slama, Steven Bills, Alex Gray, Jan Leike, Jakub Pachocki, Phil Tillet, Shantanu Jain, Greg Brockman, Nick Ryder

'공부하기 > FUTURE industry-메타버스' 카테고리의 다른 글

| 2• Apple Car에는 어떤 기능이 있습니까? (0) | 2023.02.01 |

|---|---|

| 1• Apple iCar는 언제 출시됩니까? (0) | 2023.02.01 |

| 전기 자동차 배터리 관련 자료 (0) | 2023.01.17 |

| 영국 기가팩토리: Britishvolt, Northumberland 기가팩토리의 미래 확보를 위해 매각 추진 (0) | 2023.01.16 |

| 10. Rimac Nevera – (200만 유로/220만 달러) : 2023년 기준 가장 빠른 전기차는? (0) | 2023.01.16 |

댓글